Screening simulations

These simulations involved increasing the channel and floodplain roughness along one reach at a time, to simulate adding a CRIM (debris dam and floodplain vegetation), and looking at the impact this has on river discharge just upstream of Uckfield.

-NOTE- I will add some images showing some of my results in the next update, which will follow this one.

Results have been produced for all rain time maps (1mm/day - 200mm/day). A potential problem is deciding on an appropriate time map to use, as discussed in my previous update. It was mentioned that goodness-of-fit statistics can be misleading. Therefore I decided to look at the effect of CRIMs where the 30, 36, 42 and 50mm/day time maps were applied.

- NOTE - The model may be calibrated with different time maps throughout the storm period.

My choice was based on which time maps were felt to produce the best hydrographs when initially compared to the observed hydrograph. As discussed previously, the hydrograph produced when using a 30mm/day time map shows an encouragingly good qualitative fit, albeit with a time lag.

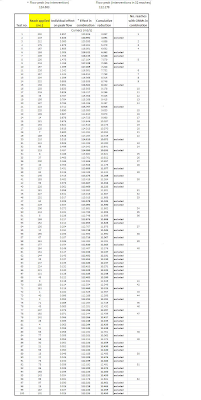

Table 1. The number of reaches in the Uck catchment where, if applied to 1 individual reach, a CRIM would decrease flood peak by more than 5, 1 and 0.1 cumecs.

Table 1 shows some of my initial results. It shows, for example, that using the 30mm/day time map there are 11 reaches which individually reduce the largest flood peak by over 5 cumecs. It can be seen that with increased rainfall rate, more reaches have a reducing effect on the main flood peak - this is not surprising, as for the higher rainfall rates the initial flood peak is higher.
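As a rough illustration of how the counts in Table 1 can be put together, here is a minimal sketch. It assumes the peak reduction for each reach is the baseline flood peak minus the peak with a CRIM on that reach. The reach names and discharge values are made up for the example and are not my results.

```python
# Thresholds from Table 1, in cumecs.
THRESHOLDS = (5.0, 1.0, 0.1)


def peak_reduction(baseline_q, crim_q):
    """Baseline flood peak minus the peak with a CRIM applied (cumecs).

    Positive means the CRIM lowered the peak, negative means it raised it.
    """
    return max(baseline_q) - max(crim_q)


def count_reaches_above(reductions, thresholds=THRESHOLDS):
    """Count reaches whose individual peak reduction exceeds each threshold."""
    return {t: sum(1 for r in reductions.values() if r > t) for t in thresholds}


# Hypothetical hydrographs (cumecs), one per reach with a CRIM applied.
baseline = [20.0, 80.0, 150.0, 90.0, 30.0]
with_crim = {
    "reach_A": [20.0, 78.0, 143.8, 92.0, 31.0],   # large reduction
    "reach_B": [20.0, 80.0, 148.5, 90.0, 30.0],   # moderate reduction
    "reach_C": [20.0, 80.0, 149.6, 90.0, 30.0],   # small reduction
    "reach_D": [20.0, 81.0, 150.3, 90.0, 30.0],   # peak increases
    "reach_E": [20.0, 80.0, 149.95, 90.0, 30.0],  # effectively neutral
}

reductions = {name: peak_reduction(baseline, q) for name, q in with_crim.items()}
counts = count_reaches_above(reductions)
print(counts)
```

Because the thresholds are nested, a reach counted in the "over 5 cumecs" column is also counted in the "over 1" and "over 0.1" columns.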

-NOTE- It is important to note that there are also many reaches which increase the flood peak when a CRIM is added, and many more 'neutral' sites where the effect is insignificant. In addition, for various reasons some of the reaches may not be suitable for CRIMs, even if the model shows that increasing roughness in that area helps to reduce flooding.

For example, an area of floodplain may already be heavily vegetated - therefore floodplain roughness cannot be increased in practice.

The results are encouraging - when looking at a qualitatively good simulation of the 2000 flood hydrograph, over 50 reaches can potentially reduce the main flood peak by over 0.1 cumecs individually.

However, there are other points to consider when looking at these results:

I was quite surprised at the extent of the effect of applying CRIMs to certain reaches, largely though not exclusively located along the main Uck. Four reaches reduced the flood peak by over 10 cumecs on their own. This is an extremely large reduction.

This may be due to a model artefact. As an additional analysis I am going to run the same simulations again, but this time not increase the channel roughness as much;

The default channel roughness (represented as Manning's roughness, n) is 0.035;

For the results discussed here, n was increased to 0.14;

This could potentially be too high, so I'll run the same simulations again, increasing channel n to 0.08. This will represent a less extreme intervention and I hope the results will still be positive.
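To get a feel for how strong these two roughness values are, here is a small sketch using the standard Manning equation, v = R^(2/3) * S^(1/2) / n. I am assuming the hydraulic radius and slope stay fixed, which they would not in a real channel (the water would get deeper as it slows), and the values of R and S below are placeholders. The model itself may not apply n in exactly this way.

```python
def manning_velocity(n, hydraulic_radius_m, slope):
    """Mean flow velocity (m/s) from the Manning equation in SI units."""
    return hydraulic_radius_m ** (2.0 / 3.0) * slope ** 0.5 / n


N_DEFAULT = 0.035          # default channel roughness
N_SCENARIOS = (0.14, 0.08)  # roughness values used to represent a CRIM

# Placeholder geometry: for fixed R and S the ratio depends only on n.
R = 1.0      # hydraulic radius in metres (assumed)
S = 0.001    # channel slope (assumed)

v_default = manning_velocity(N_DEFAULT, R, S)
velocity_ratio = {n: manning_velocity(n, R, S) / v_default for n in N_SCENARIOS}

for n, ratio in velocity_ratio.items():
    print(f"n = {n}: velocity is {ratio:.4f} of the default")
```

For the same depth and slope, n = 0.14 cuts velocity to a quarter of the default (0.035 / 0.14), while n = 0.08 cuts it to a little under half (0.035 / 0.08).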

Secondly, so far I have only analysed my results in terms of flood peak reduction. It is not necessarily helpful to reduce the flood peak if the volume of water above critical flood discharge - the amount of water spilling onto the floodplain - remains the same over a storm event (though it may still be beneficial in terms of the timing of flood waters).

Therefore I need to look at the volume of water above critical flood discharge. This is proving to be more difficult than I had hoped. I basically need to work out the area under the section of the discharge curve that lies above the critical discharge. This appears to be a slightly complicated procedure and one I haven't figured out yet.
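One possible way to do this is to integrate only the part of the hydrograph that exceeds the critical discharge, using the trapezoidal rule. The sketch below is a minimal version, assuming the model output is a list of times (in seconds) and discharges (in cumecs) and that discharge varies linearly between output times. The function name, the example hydrograph and the critical discharge value are all made up for illustration.

```python
def volume_above_threshold(times_s, discharge, q_crit):
    """Volume (m^3) of water above q_crit, by trapezoidal integration.

    times_s   : output times in seconds, increasing
    discharge : discharge in cumecs (m^3/s) at each time
    q_crit    : critical flood discharge in cumecs
    """
    if len(times_s) != len(discharge):
        raise ValueError("times_s and discharge must have the same length")

    volume = 0.0
    for i in range(len(times_s) - 1):
        dt = times_s[i + 1] - times_s[i]
        e0 = discharge[i] - q_crit       # excess at start of step
        e1 = discharge[i + 1] - q_crit   # excess at end of step

        if e0 <= 0 and e1 <= 0:
            continue  # whole step is at or below the threshold
        if e0 >= 0 and e1 >= 0:
            volume += 0.5 * (e0 + e1) * dt  # whole step above: trapezoid
        else:
            # The curve crosses the threshold within this step, so only a
            # triangle lies above it. frac is the share of the step spent
            # above the threshold, from linear interpolation.
            above = e0 if e0 > 0 else e1
            frac = above / (abs(e0) + abs(e1))
            volume += 0.5 * above * frac * dt
    return volume


# Hypothetical hourly hydrograph and critical discharge.
times = [0, 3600, 7200, 10800, 14400]
q = [10.0, 30.0, 50.0, 30.0, 10.0]
flood_volume = volume_above_threshold(times, q, q_crit=20.0)
print(flood_volume)  # cubic metres
```

Running this for the baseline and for each CRIM scenario, then taking the difference, would give the change in flood volume in the same way as the change in flood peak.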

After looking at the individual effect of adding CRIMs, I will need to look at how combinations of CRIMs affect the flood peak.

Images showing my initial results to follow....

Ed